I'd like to offer some thoughts on how "learning" differs between the new poster child of machine learning, Convolutional Neural Networks (CNNs), and my 5-year-old daughter, who is learning to read. If you want to skip the rest of the post, here's the conclusion: fears of AI taking over the world are vastly overblown.

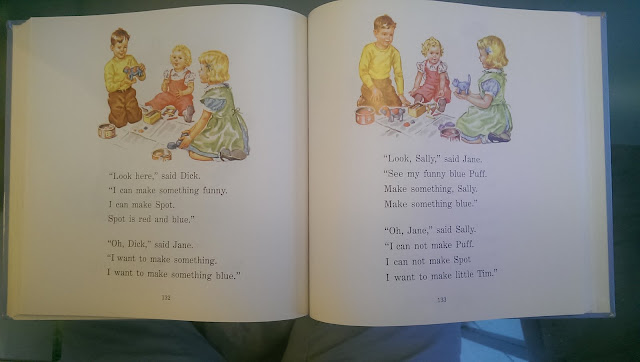

When she started, she read words one letter at a time, without restarting at every syllable. Now she groups several letters together and reads each group aloud at once. Once she has sounded out the written form, she maps it, with some hesitation, to the colloquial pronunciation. When she understands the context, she hesitates less. She has also become faster with sight words, familiar words that we can read without spelling them out. When she began, she didn't remember which words she had already read, so every word was a new exercise every time. Another interesting observation: when reading "3-D," she first ignored the "-", read "3D", and then asked what the "-" was.

I showed her a puzzle book in which one page had uppercase letters printed on teacups and lowercase letters printed on saucers. Simply by looking at the page, she figured out that the goal was to match the uppercase and lowercase forms, and she started drawing lines from the teacups to the saucers. She also noticed, and said aloud, that since some of the saucers were the same color, you don't match by color.

Then we flipped a few pages and found pictures of pairs of objects on the two sides of a scale. Her question was: do I circle the heavier side or the lighter side?

A few pages later there was a maze. She exclaimed, "Oh, I love to do mazes!" and traced a path from the point she thought was the start to the end. She hadn't noticed the start arrow, so she simply built a path that made sense to her. When I told her to start from the arrow, she said "Oh" and effortlessly redrew the path.

In the last few days I've learnt about CNNs. I have a DSP background and have known "traditional" methods such as Extended Kalman Filters for a while, but CNNs were new to me. The hierarchical representation, in which early layers respond to simple features such as edges and later layers combine them into more complex ones, offers a nice conceptual simplification of learning and classification. However, when I compare how demanding the training procedure for image classification is (large labeled datasets, many passes over the data) with how my daughter learns to read, I can safely conclude that we will be able to harness AI to our benefit. Conscious harm from an AI entity is in the far-off future.

And I'll teach her to play Go. Watch out, Google.

Later,

Kuntal.